Is your A/B test broken? Here’s how to fix it

Welcome to the sixteenth edition of MAMA Boards, an AppsFlyer video project featuring leading mobile marketing experts on camera.

For today’s mini whiteboard master class, we have Nir Pochter, CMO at Lightricks, a creative app development company with 100 million downloads so far.

A/B testing is a common tool in the app marketer’s toolbox, but many marketers still struggle with several key problems. From calculator errors to variant overload, Nir discusses the top A/B testing issues and how to solve them, so that your data stays accurate and your performance decisions stay justified.

Real experts, real growth. That’s our motto.

Enjoy!

Transcription

Hey guys, welcome to another edition of MAMA Boards by AppsFlyer. I’m Nir, CMO at Lightricks.

At Lightricks, we have developed several apps with the goal of democratizing creativity, where creativity can be anything from editing your selfies to promoting your business.

We have about 100 million downloads so far and are growing quickly. As part of that growth, we have run lots of A/B tests, made some mistakes, and figured out how to handle those mistakes and issues.

So in today’s session, we’ll discuss these issues and how to solve them.

How do A/B tests usually work?

First, let’s talk about what an A/B test actually is. In an A/B test, we test variants of an element, which could be anything: our ads, landing pages, app store screens, or even things inside the app, like intro videos or subscription screens.

We test against a specific criterion: a click, an install, or an in-app event such as a subscription or a purchase. Then we usually use a calculator behind the scenes, because the necessary calculations involve some complex statistics.

These statistics tell us whether one variant is better than the other, and with what probability. We use statistics and accept some probability of error because the fewer mistakes we want to make, the more users we need to sample, which is expensive.

So to repeat, we test between some variants, we use some statistics to calculate which variant is better, and we accept that the result is probabilistic and that there may be some mistakes. Since not all of us have a PhD in statistics, we usually use some predefined calculators.
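To make the calculator step concrete, here is a sketch of a two-proportion z-test, one common test behind conversion-rate calculators. This is an illustration under assumptions, not the calculator Lightricks uses: the function name and the example counts (1000 users per variant, 100 vs. 130 installs) are hypothetical, and the talk’s “95% probability” is treated here as requiring a two-sided p-value below 0.05.

```python
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Return the two-sided p-value for H0: variants A and B convert at the same rate.

    Hypothetical helper for illustration; real calculators may use other tests.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled conversion rate, assuming the null hypothesis (no difference) is true.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if se == 0:
        return 1.0  # No variation at all, so no evidence of a difference.
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical numbers: 1000 users per variant, 100 vs. 130 installs.
p = two_proportion_z_test(100, 1000, 130, 1000)
print(f"p-value = {p:.4f}; winner at the 95% level: {p < 0.05}")
```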

What are some of the issues with using A/B tests?

So let’s talk about the issues with using A/B testing.

The first issue occurs when you use these A/B test calculators and keep checking whether you have a winning variant, a practice known as peeking. Here’s what usually happens. Let’s say that you want to compare two variants, A and B. You send 1000 users to each variant, enter the results into the A/B testing calculator, and check whether you have a winning variant. Let’s define “winning” here as reaching a 95% probability.

If you don’t reach that probability, you send another 1000 users per variant and check again, and again, and again, until the calculator says you can stop because, with 95% probability, one of the variants is better. This is called p-hacking, or data dredging. The math behind it is hard to follow, but long story short, this process doesn’t do what you think it does and can even give you false results.

If you want to see this with your own eyes, run a simulation the next time you have two variants whose true conversion probabilities you know: simulate 1000 users per variant, put the results into a calculator, and keep going in batches as described above. You’ll see that you don’t get the results you think you should.
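The simulation described above can be sketched in Python. It assumes an A/A setup, where both variants share the same hypothetical 10% true conversion rate, so any declared winner is a false positive. The batch size of 1000 matches the talk; the 10 looks, the 400 simulated experiments, and the helper names are illustrative assumptions. It compares checking the calculator after every batch with checking it once at the end.

```python
import random
from statistics import NormalDist


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test p-value (hypothetical helper)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate(true_rate=0.1, batch=1000, max_looks=10, experiments=400, alpha=0.05, seed=42):
    """Simulate A/A tests (both variants share true_rate; all values are assumptions).

    Returns (false-positive rate with peeking, false-positive rate with one final test).
    """
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(experiments):
        conv_a = conv_b = n = 0
        declared_winner = False
        for _ in range(max_looks):
            # Send another batch of simulated users to each variant.
            conv_a += sum(rng.random() < true_rate for _ in range(batch))
            conv_b += sum(rng.random() < true_rate for _ in range(batch))
            n += batch
            # Record whether a peeker would stop here and declare a winner; keep
            # simulating so the fixed-horizon test below sees the same data.
            if not declared_winner and p_value(conv_a, n, conv_b, n) < alpha:
                declared_winner = True
        peeking_hits += declared_winner
        # Fixed horizon: a single test after all planned users have been sent.
        fixed_hits += p_value(conv_a, n, conv_b, n) < alpha
    return peeking_hits / experiments, fixed_hits / experiments


peek_rate, fixed_rate = simulate()
print(f"False positives with peeking:        {peek_rate:.1%}")
print(f"False positives with one final test: {fixed_rate:.1%}")
```

With 10 looks, the peeking false-positive rate typically comes out several times higher than the nominal 5%, while the single final test stays close to it.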

To solve this problem, use the A/B test calculator before you run the A/B test to find out how many users you need per variant. Then send that number of users to each variant, and only after you finish, use the calculator to see whether you have a winning variant.
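The up-front calculation can be sketched with the standard normal-approximation formula for comparing two conversion rates. The baseline rate (10%), the rate we hope to detect (12%), the 80% power, and the function name are assumptions for illustration; real calculators may use slightly different formulas and give slightly different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_base, p_target, alpha=0.05, power=0.8):
    """Users needed per variant to detect p_base -> p_target with a two-sided test.

    Hypothetical helper using the normal approximation for two proportions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p_base + p_target) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_base * (1 - p_base) + p_target * (1 - p_target))
    ) ** 2
    # Round up: we can't send a fraction of a user.
    return math.ceil(numerator / (p_target - p_base) ** 2)


# Assumed scenario: 10% baseline conversion, hoping to detect a lift to 12%.
n = sample_size_per_variant(0.10, 0.12)
print(f"Send {n} users to each variant, then run the calculator once.")
```

Smaller expected differences or a higher desired power both push the required number of users up, which is why it pays to fix these inputs before the test starts.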

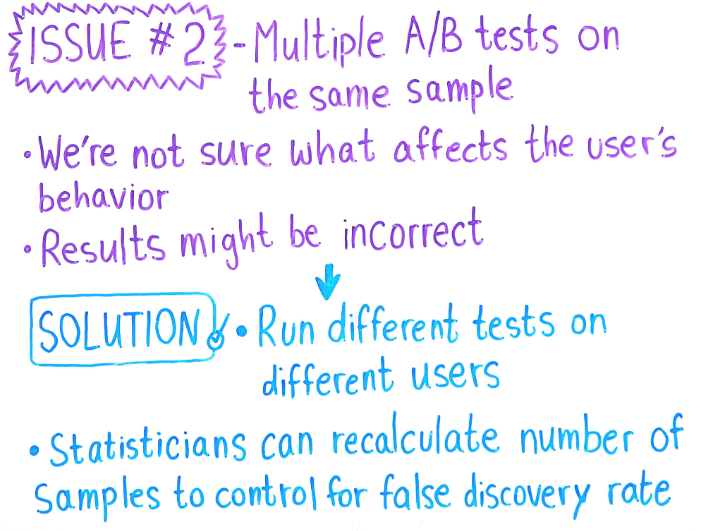

Let’s move to the second issue, which is similar to the first in the sense that we use calculators and get some results, but the results don’t mean what we think they mean. This time, it happens because we run multiple A/B tests on the same users.

Sometimes, this can happen by mistake. Let’s say that the marketing team is running A/B tests on some ads and the product team is running some A/B tests inside the product; for example, the intro video. In this example, the same users will be exposed to multiple A/B tests.

It can also happen on purpose if, say, the product team wants to run multiple A/B tests at once: on the intro video, the subscription screen, and the color of some buttons. Again, the same users are exposed to multiple tests, and we can’t intuitively tell which of the tests affected them.

If we just run the normal calculators, the results they give us might not be correct, so we need to fix them. The easiest way is to never run multiple A/B tests on the same users, but that’s not always possible. If we have to run multiple A/B tests on the same users, we should call our stats folks and let them fix the results.

They might apply what’s called a false discovery rate correction. Then they will tell us either to be satisfied with, let’s say, an 80% probability of being right instead of 95%, or to run the A/B tests again with more users; for example, 50% more than we normally would. After all that, our statisticians will calculate the results and tell us whether one variant is better than the other and what the correct probability is.
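The talk doesn’t say which false discovery rate correction the statisticians apply; the Benjamini-Hochberg procedure is one standard choice, sketched below. The three p-values are hypothetical, standing in for the intro video, subscription screen, and button color tests from the example above, and the function name is illustrative.

```python
def benjamini_hochberg(p_values, fdr=0.05):
    """Return a list of booleans: True where a test counts as a discovery at the given FDR.

    Hypothetical helper implementing the Benjamini-Hochberg step-up procedure.
    """
    m = len(p_values)
    # Sort test indices by p-value, smallest first.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k such that p_(k) <= (k / m) * fdr.
    cutoff_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * fdr:
            cutoff_rank = rank
    # Every test ranked at or below the cutoff is a discovery.
    discoveries = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= cutoff_rank:
            discoveries[i] = True
    return discoveries


# Hypothetical p-values: intro video, subscription screen, button color.
p_vals = [0.010, 0.040, 0.060]
print(benjamini_hochberg(p_vals))  # → [True, False, False]
```

Tested one by one against 0.05, the first two tests would both look like winners; after the correction, only the intro video test survives.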

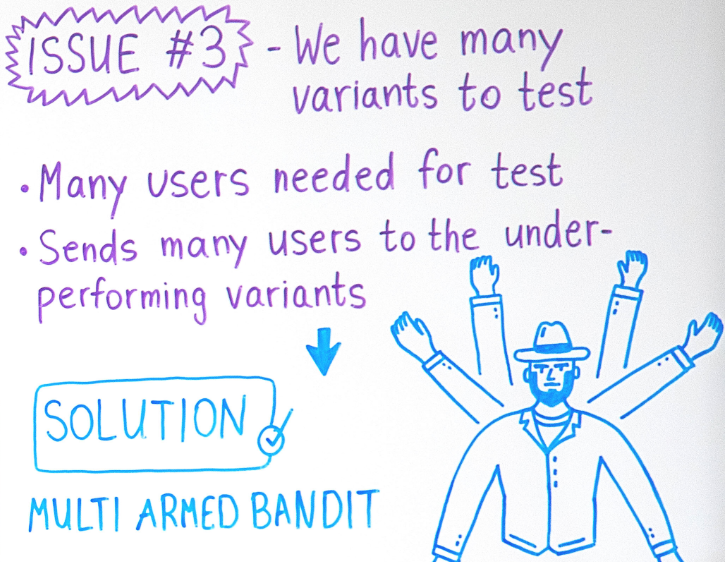

Let’s talk about the third issue, which, unlike the previous ones, is not related to calculator error and getting the wrong results. Instead, this problem comes from wanting to test too many variants. It happened to us once when a product manager came to us and said, “Hey, I want to test 100 variants.”

We used the calculator and found out that we had to bring about 50 thousand users per variant. That’s simply a lot of users to bring for a single A/B test, which can easily become a problem. Another problem is that when you have so many variants, some of them usually don’t perform very well.
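Using the numbers from this story, the total traffic for one classical test with 100 variants at about 50 thousand users each works out to:

$$
N_{\text{total}} \approx 100 \times 50{,}000 = 5{,}000{,}000 \text{ users}
$$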

Bottom line

The bottom line is that you’re losing money by continuing to send more and more users to these poorly performing variants just for the sake of the A/B test. The solution here is to consider non-classical methods: instead of classical A/B testing, we can use, for example, Multi-Armed Bandit algorithms.

The intuition behind these algorithms is this: instead of splitting all users evenly across all variants to increase the probability that we know which one is better, they start by sending the same number of users to each variant, but only a small number. Then, to increase the probability that we know which one is better, they keep sending users mostly to the variants that have performed best so far.

By doing so, we waste less money, because we send users mostly to the better-performing variants, and these algorithms usually give us results much faster than classical A/B testing. The drawback is that we don’t get a formal guarantee that the winning variant is better than the losing variants with a given probability, as we do with classical A/B testing methods.
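The talk doesn’t name a specific Multi-Armed Bandit algorithm. The sketch below uses Thompson sampling with Beta-Bernoulli posteriors, one common bandit algorithm, in a simulation where the four variants’ true conversion rates, the number of users, and the function name are all hypothetical. Each incoming user goes to the variant with the highest random draw from its posterior, so traffic drifts toward the best-performing variant as evidence accumulates.

```python
import random


def thompson_sampling(true_rates, n_users=20000, seed=7):
    """Simulate Beta-Bernoulli Thompson sampling over variants with hypothetical true_rates.

    Returns how many users each variant received.
    """
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k
    failures = [0] * k
    for _ in range(n_users):
        # Draw a plausible conversion rate for each variant from its Beta posterior
        # (uniform Beta(1, 1) prior) and send this user to the highest draw.
        draws = [rng.betavariate(successes[i] + 1, failures[i] + 1) for i in range(k)]
        arm = max(range(k), key=lambda i: draws[i])
        # Simulate whether the user converts, then update that variant's counts.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [successes[i] + failures[i] for i in range(k)]


# Hypothetical variants: the last one has the highest true conversion rate.
allocation = thompson_sampling([0.05, 0.08, 0.10, 0.15])
print(allocation)
```

Because all posteriors start out identical, early traffic is spread across every variant, which roughly mirrors the small, equal start described above; most later traffic goes to the variant with the highest true rate instead of being split evenly.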

So, A/B testing is a really important tool and we should use it, but we must make sure that we use it correctly. Before running your A/B test, use the calculators to calculate the sample size. Don’t run multiple A/B tests on the same users, and if you do, have your stats folks fix your calculations. And if you have many variants, consider using Multi-Armed Bandit algorithms instead of the classical tools.

That’s it for today. If you have questions or comments, please use the comments box below. If you want to watch more MAMA Boards, please use this link. Thanks for watching. Bye bye.